Rohit Swami

Senior Software Engineer

@ElucidataInc

About Me

Senior Software Engineer with 4.5 years building cloud-native products on AWS using Python. Architected low-latency microservices, scalable data pipelines, and real-time infrastructure with Redis + WebSocket, processing 100k+ biomedical entities per run and cutting KEGG visualization time by 99.9%. Skilled in Docker, Kubernetes, IaC (Terraform/CloudFormation), and CI/CD automation. Built and deployed GenAI solutions (LLMs + RAG) for real-time, context-aware search on proprietary data.

Proven mentor and designer, ready to lead a small engineering team, drive architecture strategy, and accelerate release velocity as a hands-on tech lead.

Let’s connect and turn ideas into impactful solutions together!

Personal Information

- Full Name: Rohit Swami
- Date of Birth: 21 Jan 1999
- Address: Hisar, Haryana, India
- Email: [email protected]
- Phone: +91 80594 59498

Specialization

Data Structure & Algorithms, Python, React, Redux, Redis, WebSocket, GenAI, LLMs, RAG, GraphQL, R Shiny, AWS, Docker, RESTful APIs, Microservices Architecture, MySQL, MongoDB, AWS Batch, ECR, EC2, Lambda, S3, JavaScript, Shiny, Flask, Tableau, Jira

Work Experience

Elucidata


New Delhi, India

Senior Software Engineer

• Optimized KEGG pathway rendering pipeline, reducing full render time from 22 mins to under 1 min (95.5%+) and UI filter latency from 15 mins to <1 second (99.9%), significantly enhancing user experience.

• Migrated critical graph generation logic from R to Python, achieving 10× faster execution, 80% lower memory usage, and enabling processing of 100K+ biomedical entities across 1,000+ pathways.

• Single-handedly architected and shipped a high-speed, reusable Redis + WebSocket notification microservice (AWS EC2, VPC security groups, CI/CD): delivers sub-100ms real-time push updates from any backend to users, architected to scale to 100K+ notifications per day across multiple production apps.

• Diagnosed and fixed a major memory leak in tooltip rendering, reducing memory consumption by 90% and preventing Shiny app disconnects on server.

• Built and deployed production-grade GenAI apps leveraging LLMs, RAG, vector databases (FAISS/Qdrant), and prompt engineering for real-time semantic search and summarization on domain-specific data.

• Developed 10+ interactive dashboards using R Shiny and React, including a data explorer for a Yale University research project, later published in a peer-reviewed biomedical journal.

• Mentored and led a team of 3 engineers; introduced test automation (85%+ coverage), performance benchmarking, and code reviews, reducing production issues by 40%.

• Hardened infrastructure security: implemented HIPAA/GDPR-compliant data handling across S3, RDS, VPC, passing a third-party security audit with zero critical findings.

Ignite Solutions


Pune, India

Software Engineer

• Built demand-forecasting pipelines (Python, Airflow) reducing MAPE by 15% for 500K retail energy meters.

• Modelled 100M credit-card transactions, boosting upsell precision to 90% in pilot region.

• Created Flask-based recruitment portal integrated with Google Workspace, cutting hiring cycle time by 30%.

InterviewBit


Bengaluru, India

Software Engineer, Intern

• Implemented an end-to-end auto-suggestion tool that finds semantically similar solutions to students' queries, and deployed the model as a REST API on AWS EC2 with a Flask backend. The system reduced the average query resolution time for 72% of students from 2 days to 13.3 minutes.

• Developed a dashboard to analyse Key Performance Indicators (KPIs) using SQL queries.

• Built an NLP tool to calculate the percentage of code and text in TAs' responses, with the aim of increasing interaction between students and TAs.

• Wrote scripts to send emails and migrate 1500+ students from Flock to the open-source platform Mattermost.

• Revamped InterviewBit's webpages and worked on content creation for Data Science, Machine Learning and Deep Learning.

upGrad


Remote

Data Science, Intern

• Helped 200+ students improve their skills by providing clear, positive, line-by-line actionable feedback on their submitted projects using upGrad's code review tool for data science courses.

MyGov (MoEIT, India)


New Delhi, India

Data Analyst, Intern

• Analyzed public sentiment on various government policies and campaigns on social media.

• Exported over 4000 tweets with the Twitter API and built a hybrid solution to classify each tweet as positive or negative using the KNN algorithm.

• Performed data mining operations on websites for various internal purposes.

MSTC, LPU


LPU, India

Lead Organizer

• MSTC hosts community events to guide professionals in different technologies. We strive to create a platform where like-minded individuals come together to share and learn about technology.

• As a lead speaker, I share my knowledge of front-end technologies.

Education

B.Tech (Computer Science & Engineering)

5th - 12th

Publications

International Journal of Emerging Technologies and Innovative Research (www.jetir.org), 5(12), 598-605

ISSN: 2349-5162

Think India Journal, 22(3), 8382-8391

ISSN: 0971-1260

ACM - International Conference Proceedings Series (Jun, 2019)

DOI: 10.1145/3339311.3339356

Blogs

Certifications & Achievements

Portfolio

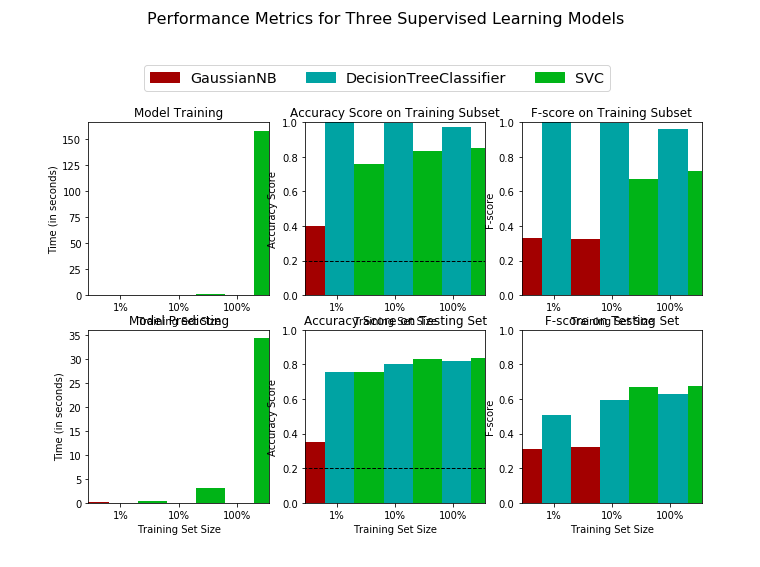

Finding Donors (CharityML)


- Date: 14 Sep 2018
- Site link: Jupyter Notebook URL
- Algorithms: Gaussian Naive Bayes (GaussianNB), Decision Tree Classifier, C-Support Vector Classification
- Technology: Python, Jupyter-Notebook

Problem Statement: Build an algorithm to best identify potential donors.

• My goal was to evaluate and optimize several supervised learners to determine which algorithm provides the highest donation yield while reducing the total number of letters sent.

• Implemented a pipeline in Python that trains and predicts with a given supervised learning algorithm. I used grid search to tune the parameters of all algorithms and a Gradient Boosting Classifier to extract feature importances.

Indian Paper Currency Prediction


- Date: June 2020
- Code: GitHub Repo
- Demo: https://indian-currency-prediction.herokuapp.com/
- Algorithms: Convolutional Neural Network (CNN)
- Technology: Python, Jupyter-Notebook, Flask

Problem Statement: Build an app to predict Indian paper currency.

• Collected images from search engines and trained a Convolutional Neural Network (CNN) model to predict the 7 denominations of Indian paper currency, i.e. 10, 20, 50, 100, 200, 500, and 2000.

• Deployed the machine learning model on Heroku with a Flask back-end and secured the app with CSRF protection.

Image Classification with PyTorch (Deep Learning)


- Date: 13 Nov 2018
- Site link: GitHub Repo URL
- Technology: PyTorch Framework, Python, Jupyter-Notebook

Problem Statement: Build a command-line application to predict a flower's class along with its probability.

• AI image classification and machine learning utilizing the PyTorch framework.

• Used transfer learning on pre-trained architectures including vgg11, vgg13, vgg16, vgg19, densenet121, densenet169, densenet161, and densenet201.

• Trained dynamic neural networks in Python with GPU acceleration, reaching 85% accuracy.

Customer Churn Prediction (PySpark)


- Date: 09 June 2019
- Site link: GitHub Repo URL
- Blog: https://medium.com/@rowhitswami/customer-churn-prediction-of-a-music-app-using-pyspark-d65b8f5be047
- Algorithms: PySpark, TF-IDF, SGDClassifier
- Technology: Python, Jupyter-Notebook

Problem Statement: Customer churn prediction for a music app using Spark

• Used PySpark to analyze the data of a fictional music app, Sparkify, to identify the factors associated with customers who are most likely to churn.

• Trained a machine learning model on IBM Cloud with an accuracy of 83.87%.

StackOverflow Survey '17


- Date: 20 Feb 2019
- Site link: GitHub Repo URL
- Blog: https://medium.com/@rowhitswami/4-crunching-facts-of-stack-overflow-developer-survey-17-441eb082331f
- Algorithms: Exploratory Data Analysis
- Technology: Python, Jupyter-Notebook

Problem Statement: Analyze the public data of the StackOverflow Survey 2017

• Analyzed the StackOverflow public data of over 64,000 developers around the globe for the year 2017.

• Answered questions such as how education may influence salary, the gender ratio of developers across the world, the rate of increase in salary with years of experience, and whether knowing more languages implies a higher salary in the IT sector.

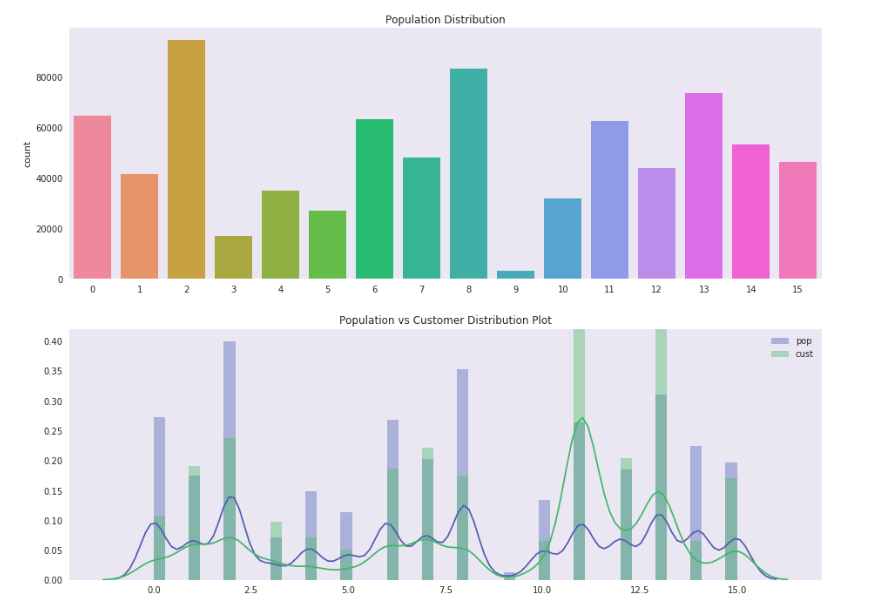

Identify Customer Segments with Arvato

- Date: 24 Dec 2018
- Site link: Jupyter Notebook URL
- Algorithms: KMeans
- Technology: Python, Jupyter-Notebook

Problem Statement: Identify Customer Segments with Arvato Dataset

• The data and design for this project were provided by Arvato Financial Services. I applied unsupervised learning techniques on demographic and spending data for a sample of German households.

• Preprocessed the data, applied dimensionality reduction techniques, and implemented clustering algorithms to segment customers with the goal of optimizing customer outreach for a mail order company.

• The objective was to find relationships between demographic features, organize the population into clusters, and see how prevalent customers are in each of the segments obtained.

Disaster Response Pipeline


- Date: 13 Apr 2019
- Site link: GitHub Repo URL
- Technology: Python, Jupyter-Notebook

Problem Statement: Build a machine learning pipeline

• Analyzed disaster data from Figure Eight to build a model for an API that classifies disaster messages.

• Created a machine learning pipeline to categorize the events so that messages can be routed to the appropriate disaster relief agency, and deployed it as a web app.

IPL Live Updates (Python)


- Date: 3 Apr 2019
- Site link: GitHub Repo URL
- Technology: Python

Problem Statement: Get real-time updates of cricket matches on your desktop

• Get real-time push notifications on your desktop for every four, six, and fall of a wicket in Indian Premier League matches.

• Used a persistent HTTP connection to extract the live score from a webpage.

ContinentInfo (Python Package)


- Date: 3 Mar 2019
- Site link: PyPi Index URL
- Technology: Python
- Installation: pip install ContinentInfo

Problem Statement: Get the name of the continent in which a country is located.

• Built a simple Python package to understand how packages work on PyPI.

Shortto.com - URL Shortener

- Site link: www.shortto.com
- Contributor: Soumyajit Dutta, Biswarup Benerjee
- Technology: HTML, CSS, JavaScript

A project made under Microsoft Technical Community LPU

• The project centers on developing a URL shortening service. Long URLs are hard to remember; use shortTo.com to shorten a URL into one that is easy to remember.

• The service is hosted on AWS with a Flask backend. The Bootstrap framework and JavaScript with media queries are used to make the UI responsive and interactive.

Twitter Sentiment Analysis using Hadoop

- Date: Oct 2017 - Mar 2018
- Client: Personal
- Technology: Hadoop, Flume, Hive, Twitter Streaming API, Python

Twitter, one of the largest social media sites, receives millions of tweets every day. This huge amount of raw data can be used for industrial or business purposes once it is organized and processed according to requirements. This project provides a way to perform sentiment analysis using Hadoop, processing the data on a Hadoop cluster quickly, in real time.

Hacktoberfest Status Checker


- Date: Oct 2017
- Site link: https://www.openstalk.tech/
- Technology: HTML, CSS, JavaScript, Bootstrap, GitHub REST API v3
- Contributor: See Complete List

• Hacktoberfest is a month-long celebration of open source software in partnership with GitHub, in which participants need to make 4 pull requests across GitHub.

• Hacktoberfest Status Checker is an open-source tool for checking the status of your Hacktoberfest activities in the month of October.

Data Analysis (Freelancing)


- Date: 24 Apr 2018
- Site link: GitHub Repo
- Client: Lcodi56
- Technology: Python

Sales data was given in CSV format and the task of this project was to derive valuable insights from the raw data, like:

• Which product was most sold?

• What payment modes were used for purchasing products?

• What is the most common payment method for the United States?

• What was the earliest time of the day a transaction occurred?

• Were there repeat customers? Discuss possible issues.
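
As an illustration only, questions like the ones above could be answered with the Python standard library roughly as follows. This is a minimal sketch, not the original project code: the column names (`product`, `payment_type`, `country`, `transaction_time`, `customer`) and the sample rows are hypothetical stand-ins for the client's CSV, which is not shown here.

```python
import csv
import io
from collections import Counter
from datetime import datetime

# Hypothetical sample data; the real file and its column names are assumptions.
SAMPLE_CSV = """product,payment_type,country,transaction_time,customer
Product1,Visa,United States,2009-01-02 06:17,Ann
Product2,Mastercard,United Kingdom,2009-01-02 04:53,Bob
Product1,Visa,United States,2009-01-03 13:08,Ann
Product1,Amex,United States,2009-01-04 12:56,Cid
Product3,Visa,Canada,2009-01-04 20:11,Dee
"""


def load_rows(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def summarize(rows):
    """Answer the five questions for the given rows."""
    products = Counter(r["product"] for r in rows)
    payments = Counter(r["payment_type"] for r in rows)
    us_payments = Counter(
        r["payment_type"] for r in rows if r["country"] == "United States"
    )
    # Compare only the time of day, ignoring the date.
    times = [
        datetime.strptime(r["transaction_time"], "%Y-%m-%d %H:%M").time()
        for r in rows
    ]
    customers = Counter(r["customer"] for r in rows)
    return {
        "top_product": products.most_common(1)[0][0],
        "payment_modes": sorted(payments),
        "top_us_payment": us_payments.most_common(1)[0][0],
        "earliest_time": min(times),
        # Name matching is naive: two different people can share a name.
        "repeat_customers": sorted(n for n, c in customers.items() if c > 1),
    }


summary = summarize(load_rows(SAMPLE_CSV))
print(summary)
```

Matching repeat customers by name alone is one of the "possible issues" the last question hints at: distinct customers with the same name would be counted as one, so a real analysis would want a more reliable identifier if the data has one.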

Smart Q-Labs


- Date: Sep 2017
- Site link: GitHub Repo
- Technology: HTML, CSS, jQuery, PHP, Java
- Platform: Web, PWA, Android
- Contributor: Shriom Tripathi, Soumyajit Dutta, Biswarup Benerjee

• Smart Q Labs is a dynamic queue management solution that keeps track of your queue number and sends you timely notifications. It also provides analytics for outlets so that they can manage their queues and enjoy doing so.

• I designed the mobile website as a PWA (Progressive Web App), built to work offline or on a bad network connection. A PWA uses modern web capabilities to deliver an app-like experience to users, and uses the app-shell model to provide app-style navigation and interactions.

Data Analysis (Freelancing)


- Date: 04 May 2018
- Site link: GitHub Repo
- Client: Lcodi56
- Technology: Python

The dataset contains information on people who died from "Diabetes Mellitus" between 1999 and 2015. The task of this project was to derive valuable insights from the raw data, like:

• In what state did the most deaths occur?

• Over what period (start and end) was the data collected?

• What was the total number of deaths for 2006?

• What state had the fewest deaths in 2001?

• How many people died from "Diabetes Mellitus" over the entire reporting period?
